LLM Code Generation: A Reality Check from the Trenches

After using GPT-4, Claude, and Copilot daily for the past year, here's what actually works and what doesn't in real production environments.

The Hype vs Reality

We've all seen the viral demos: developers typing a comment and watching entire functions materialize. Product managers dreaming of 10x productivity gains. VCs predicting the end of traditional software engineering. But after integrating LLMs into my daily workflow for the past year, I've learned the reality is far more nuanced.

What Actually Works

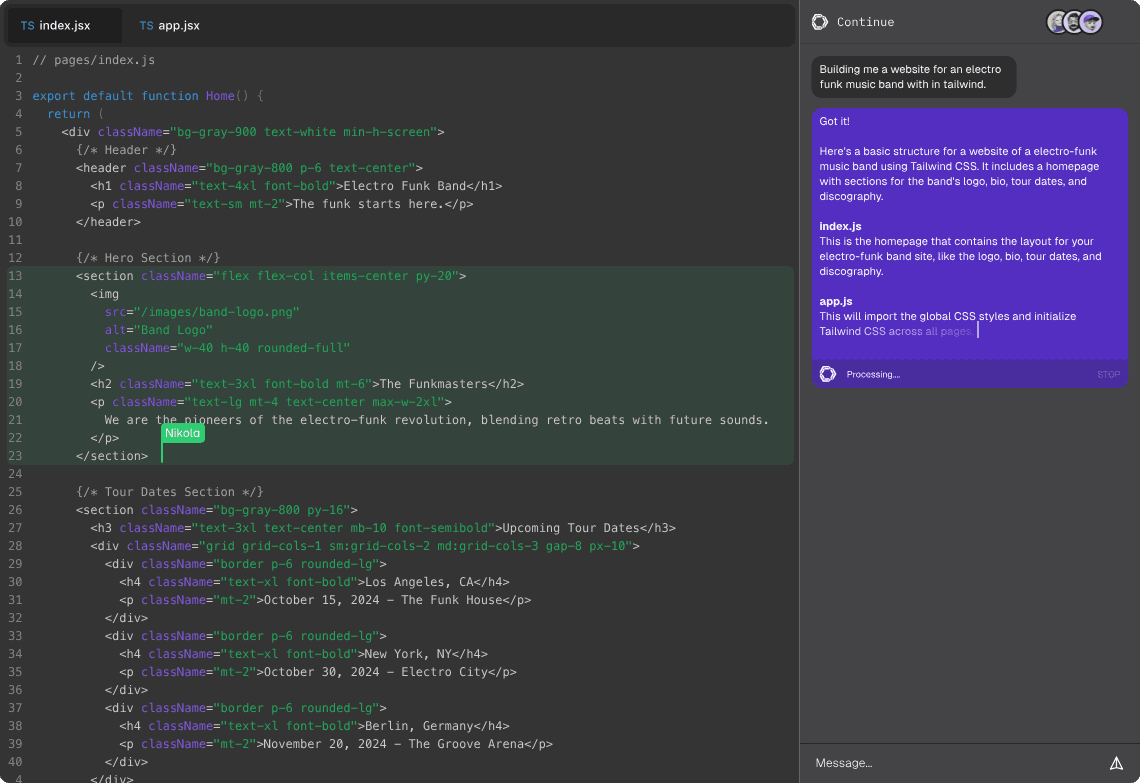

Boilerplate and Scaffolding

This is where LLMs genuinely shine. Need a new React component with TypeScript, prop validation, and error boundaries? LLMs can generate it in seconds. Setting up a new API endpoint with proper validation, error handling, and documentation? Done. The time savings here are real and significant.
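To make "boilerplate" concrete, here is a minimal sketch of the kind of validation scaffolding an LLM tends to produce reliably. Everything in it is hypothetical and chosen for illustration: the `CreateUserRequest` payload, its three fields, and the `ValidationError` class are assumptions, not part of any real API or framework.

```python
from dataclasses import dataclass


class ValidationError(ValueError):
    """Raised when an incoming payload fails validation (hypothetical)."""


@dataclass(frozen=True)
class CreateUserRequest:
    """Hypothetical payload for a 'create user' endpoint."""

    email: str
    display_name: str
    age: int

    @classmethod
    def from_dict(cls, payload: dict) -> "CreateUserRequest":
        """Validate a raw dict and build a typed request object."""
        required = ("email", "display_name", "age")
        missing = [field for field in required if field not in payload]
        if missing:
            raise ValidationError(f"missing fields: {', '.join(missing)}")

        email = payload["email"]
        if not isinstance(email, str) or "@" not in email:
            raise ValidationError("email must be a string containing '@'")

        display_name = payload["display_name"]
        if not isinstance(display_name, str) or not display_name.strip():
            raise ValidationError("display_name must be a non-empty string")

        age = payload["age"]
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(age, bool) or not isinstance(age, int) or age < 0:
            raise ValidationError("age must be a non-negative integer")

        return cls(email=email, display_name=display_name.strip(), age=age)
```

None of this is hard to write by hand. It is simply repetitive, which is why delegating it pays off.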

Syntax Translation

Converting code between languages or frameworks is another strong use case. I've used Claude to translate Python data processing scripts to TypeScript, convert class components to hooks, and migrate between different ORMs. It's not perfect, but in my experience it handles roughly 80% of the mechanical translation work.

Documentation and Comments

Writing clear documentation is tedious but essential. LLMs are surprisingly good at generating JSDoc comments, README files, and inline explanations. They understand context well enough to document what your code actually does, not just parrot obvious information.

What Doesn't Work (Yet)

Complex Business Logic

This is where the limitations become obvious. Try asking an LLM to implement a non-trivial algorithm with specific performance requirements, edge cases, and domain constraints. You'll get something that looks plausible but often has subtle bugs, misunderstands requirements, or makes inefficient choices.

LLMs lack true understanding of your codebase's architecture, your team's conventions, and the broader system context. They can't reason about why certain patterns exist or when to break established conventions.
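As an illustration of "looks plausible but has subtle bugs", consider splitting a bill evenly. This is a made-up example, not output captured from any particular model, and the function names are hypothetical. The domain constraint is that the shares must add up to the original total, which the obvious implementation quietly violates.

```python
def split_naive(total_cents: int, people: int) -> list[int]:
    """Plausible-looking version: every share is the rounded average."""
    share = round(total_cents / people)
    return [share] * people


def split_exact(total_cents: int, people: int) -> list[int]:
    """Distribute the remainder so the shares always sum to the total."""
    if people <= 0:
        raise ValueError("people must be positive")
    base, remainder = divmod(total_cents, people)
    # The first `remainder` people pay one extra cent each.
    return [base + 1 if i < remainder else base for i in range(people)]


print(sum(split_naive(1000, 3)))  # 999: one cent has gone missing
print(sum(split_exact(1000, 3)))  # 1000
```

The naive version passes a casual read and most happy-path tests. The bug only surfaces when the total is not evenly divisible, which is exactly the sort of edge case that has to be spelled out in the prompt or caught in review.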

Debugging Existing Code

When production breaks at 3 AM, LLMs are rarely helpful beyond suggesting obvious fixes. They can't trace through complex state interactions, understand subtle race conditions, or diagnose issues that span multiple services. The best debugging still requires deep system knowledge that LLMs don't possess.

Performance Optimization

LLMs will happily generate code with O(n²) complexity when O(n) is achievable. They don't reason about memory allocation patterns, cache locality, or other performance considerations. If you need optimized code, you're still writing it yourself or heavily modifying LLM output.
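A small sketch of the O(n²) versus O(n) gap, using duplicate detection as a stand-in problem. The function names are hypothetical, and the linear version assumes the items are hashable.

```python
def has_duplicates_quadratic(items: list[str]) -> bool:
    """Compare every pair: O(n^2) comparisons in the worst case."""
    for i in range(len(items)):
        for j in range(i + 1, len(items)):
            if items[i] == items[j]:
                return True
    return False


def has_duplicates_linear(items: list[str]) -> bool:
    """Track seen values in a set: O(n) expected time, O(n) extra memory."""
    seen: set[str] = set()
    for item in items:
        if item in seen:
            return True
        seen.add(item)
    return False
```

Both functions return the same answers, so a correctness test will not tell them apart. The difference only shows up under load, which is why a nested-loop version can survive review if nobody is thinking about input size.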

The Productivity Paradox

Here's the uncomfortable truth: LLMs make you faster at writing code but not necessarily at shipping features. The bottleneck in software development has never been typing speed—it's understanding requirements, making architectural decisions, and managing complexity.

I can generate a component in 30 seconds instead of 5 minutes, but I still spend hours thinking about state management, error handling, accessibility, and how it fits into the larger system. The cognitive work hasn't changed.

Best Practices I've Learned

1. Treat LLM output as draft zero. Never merge generated code without understanding it completely. The code might work but violate important patterns or introduce subtle issues.

2. Use them for exploration. LLMs are excellent for prototyping different approaches. Ask them to show you three ways to solve a problem, then pick the best one and refine it.

3. Provide maximum context. The more context you give—file snippets, error messages, type definitions—the better the output. Vague prompts get vague results.

4. Know when to stop. If you're on your fifth iteration of prompting and the code still isn't right, it's faster to just write it yourself.
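For the "provide maximum context" tip, one way to make it a habit is a small helper that assembles the prompt from whatever context you have on hand. This is only a sketch: `build_prompt`, its parameters, and the section titles are hypothetical, and the layout is one arbitrary choice among many.

```python
def build_prompt(
    task: str,
    file_snippet: str = "",
    error_message: str = "",
    type_definitions: str = "",
) -> str:
    """Assemble a prompt, including only the context sections that are non-empty."""
    sections = [
        ("Task", task),
        ("Relevant code", file_snippet),
        ("Error message", error_message),
        ("Type definitions", type_definitions),
    ]
    # Skip empty sections so the model is not handed blank headings.
    parts = [f"## {title}\n{body.strip()}" for title, body in sections if body.strip()]
    return "\n\n".join(parts)


prompt = build_prompt(
    task="Fix the crash when the user record has no id.",
    error_message="KeyError: 'id'",
)
print(prompt)
```

The point is less the helper itself than the checklist it encodes: if the snippet, error, and type slots are all empty, the prompt is probably too vague to get a useful answer.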

The Bottom Line

LLMs are powerful tools that genuinely improve developer productivity, but they're not replacing software engineers anytime soon. They're excellent junior pair programmers: good at mechanical tasks and helpful for exploration, but still in need of senior oversight.

The developers winning with LLMs aren't the ones trying to automate their jobs away. They're the ones who understand both the capabilities and limitations, using AI to eliminate grunt work while focusing their own expertise on the hard problems that actually matter.

That's the reality from the trenches. Less revolutionary than the hype, but more useful than the skeptics admit.